DIM appears to succeed but actually fails

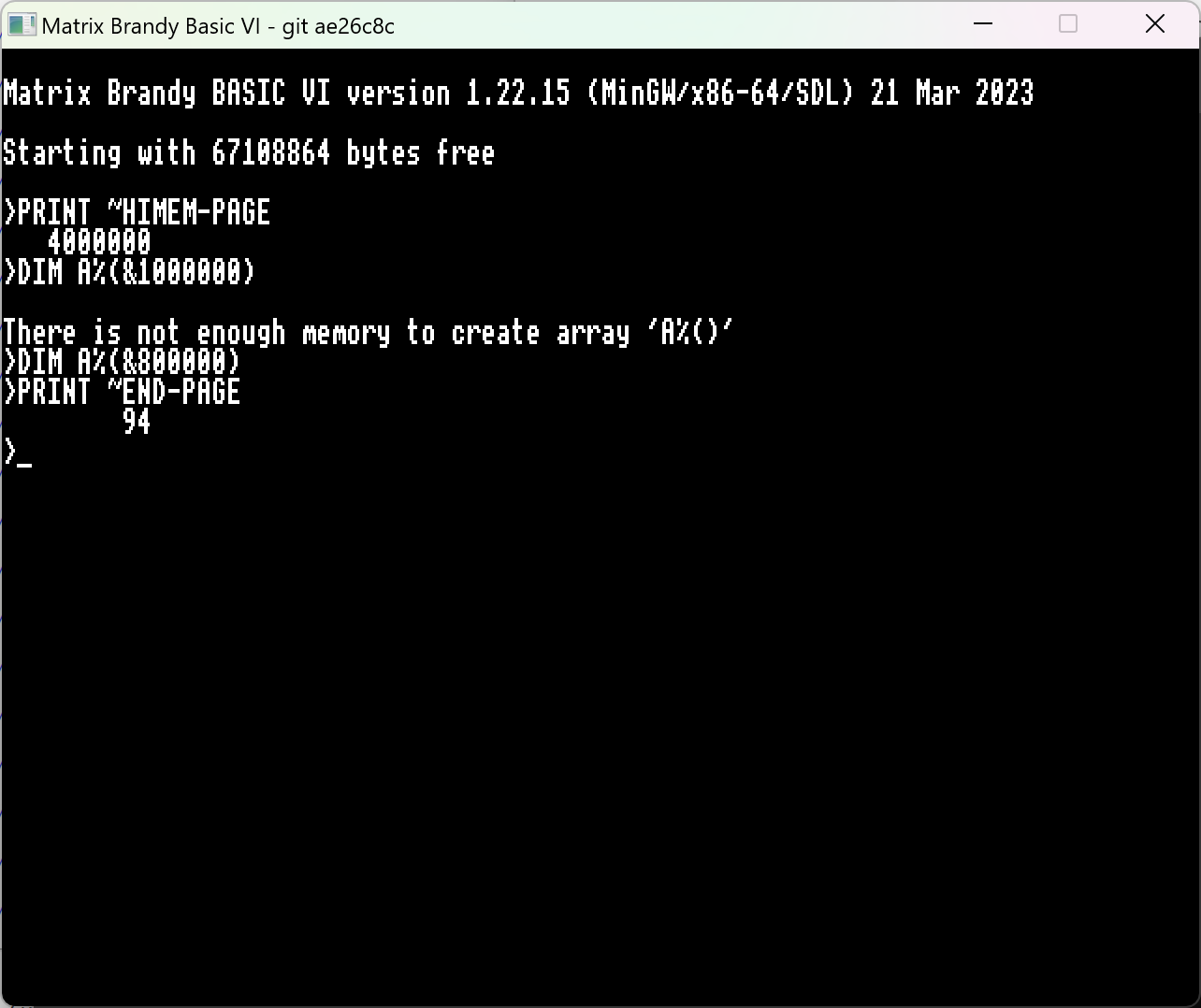

In the screenshot above, the first DIM fails (as expected) because there is not enough room. But the second DIM (which should succeed, and indeed appears to have) seemingly hasn't actually allocated any heap, judging by the value of END. Something wrong here?


Comments

-

I will take a closer look at this.

Edit: It works if you CLEAR before retrying. According to LVAR, the failed DIM leaves a stub array behind, and when that array is later allocated, END for some reason isn't updated. And when doing a chain of these, the allocations seem to change! So there is definitely something weird going on. Perhaps recording the variable allocation before checking whether the array will fit isn't working quite right.

More investigation required.

-

In Brandy, it's being erroneously handled as a local array, so it is allocated on the stack rather than the heap, which gets messed up because it isn't local. Perhaps you have the same problem?

-

-

I am sorry to hear that.

As a thought, can you port your 32-bit assembler to C so all BBCSDL/BBCTTY implementations are then built from the C source? I accept that would result in a performance hit on 32-bit x86 builds.

Back to the DIM issue, my fix is to reverse the insertion of the variable reference block into the memory structure, and if it can be safely removed from the heap, then doing that too.

While this wasn't a problem with my DIM HIMEM code, as it used malloc() to request memory, I'm also cleaning that up a bit so CLEAR HIMEM will delete the variables rather than leaving a stub behind. And yes, I also appreciate that if it is unable to clear the variable description block from the heap, about 76 bytes are leaked.
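The "remove it from the heap if that is safe, otherwise accept a small leak" idea can be sketched with a toy bump allocator. This is only an illustration under stated assumptions: `arena`, `arena_alloc` and `arena_release_if_last` are hypothetical names, not Brandy's code, and the only case treated as "safe" here is when the block is the most recent allocation.

```c
#include <assert.h>
#include <stddef.h>

#define ARENA_SIZE 4096   /* hypothetical heap size */

/* Toy bump allocator standing in for the BASIC heap (illustration only). */
typedef struct {
    unsigned char mem[ARENA_SIZE];
    size_t top;           /* offset of the first free byte */
} arena;

/* Round a request up to a multiple of 8 bytes. */
static size_t round_up(size_t n) { return (n + 7u) & ~(size_t)7u; }

/* Allocate n bytes by advancing the top of the arena; NULL if no room. */
void *arena_alloc(arena *a, size_t n) {
    assert(a != NULL);
    size_t need = round_up(n);
    if (need > ARENA_SIZE - a->top) return NULL;
    void *p = a->mem + a->top;
    a->top += need;
    return p;
}

/* Reclaim block p of n bytes only if it is the newest allocation.
   Returns 1 if the space was reclaimed, 0 if it has to be leaked. */
int arena_release_if_last(arena *a, void *p, size_t n) {
    size_t need = round_up(n);
    unsigned char *q = p;
    if (need <= a->top && q == a->mem + (a->top - need)) {
        a->top -= need;   /* nothing was allocated after it: roll back */
        return 1;
    }
    return 0;             /* something sits above it: leave it in place */
}
```

If anything has been allocated after the block, the sketch simply leaves the bytes where they are, which corresponds to the roughly 76-byte leak mentioned above.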

"I accept that would result in a performance hit on 32-bit x86 builds."

It's a massive hit, something like doubling execution time and a similar increase in code size.

It seems to me that the future is 64-bits (only Windows, of the major operating systems, still supports 32-bit apps 'transparently') so I can't get excited about not fixing it in 32-bit x86 builds.

The only way the 'feature' could have any practical negative impact, that I can think of, would be if a program tried to discover the maximum size of array that can be allocated, by trapping errors and iteratively repeating the DIM until it doesn't fail. Something like this:

    S% = &1000000
    ON ERROR LOCAL S% -= &1000
    DIM A%(S%)
    RESTORE ERROR

But in practice one couldn't use that technique because there's no way of knowing how much free memory is left after the successful allocation, it could easily be too little for the rest of the program to run!

"Back to the DIM issue, my fix is to reverse the insertion of the variable reference block into the memory structure, and if it can be safely removed from the heap, then doing that too."

Sounds complicated.


In Brandy, not so much. Variable reference blocks are held in a hash table which points to linked lists. So it's just a matter of stepping through the list associated with the hash until an item's link points to the block to remove (item+1), replacing that link with the removed block's own link (to item+2), and then, if possible, freeing the heap space.
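For anyone following along, unlinking from a hash chain of that kind might look roughly like the sketch below. It is not taken from the Brandy source: `var_block`, `NBUCKETS`, `bucket_of`, `var_insert`, `var_find` and `var_unlink` are hypothetical names, and the real block layout and hash function will differ.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define NBUCKETS 64   /* hypothetical table size */

/* Hypothetical variable reference block (illustration only). */
typedef struct var_block {
    struct var_block *next;   /* next block on the same hash chain */
    const char *name;         /* variable name, e.g. "A%(" */
} var_block;

static var_block *table[NBUCKETS];

/* Simple string hash (djb2 style), reduced to a bucket index. */
static unsigned bucket_of(const char *name) {
    unsigned long h = 5381;
    while (*name) h = h * 33 + (unsigned char)*name++;
    return (unsigned)(h % NBUCKETS);
}

/* Push a block onto the front of its chain. */
void var_insert(var_block *vb) {
    assert(vb != NULL && vb->name != NULL);
    unsigned b = bucket_of(vb->name);
    vb->next = table[b];
    table[b] = vb;
}

/* Look a name up; NULL if it is not in the table. */
var_block *var_find(const char *name) {
    var_block *vb = table[bucket_of(name)];
    while (vb != NULL && strcmp(vb->name, name) != 0) vb = vb->next;
    return vb;
}

/* Unlink the named block from its chain and return it (NULL if absent).
   The caller decides whether its heap space can be reclaimed. */
var_block *var_unlink(const char *name) {
    var_block **link = &table[bucket_of(name)];
    while (*link != NULL) {
        if (strcmp((*link)->name, name) == 0) {
            var_block *victim = *link;
            *link = victim->next;   /* predecessor now skips the victim */
            victim->next = NULL;
            return victim;
        }
        link = &(*link)->next;
    }
    return NULL;
}
```

Walking a pointer to the link, rather than a pointer to the item, means the head of the chain needs no special case.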

"Variable reference blocks are held in a hash table which points to linked lists."

Out of interest, where is the hash table stored? Is that also on the heap, or does it have its own separate area of memory?

I've checked the behaviour of my BASICs, and the failure isn't caused by the array seeming to be LOCAL (I'm still not sure what it is caused by, however). The only situation in which the 'stub array' would appear to be LOCAL is if the failing DIM was itself allocating a local array on the stack.

In my BASICs DIM is by far the most complicated of all the statements! This is largely because it is used not only to allocate global and local arrays, or to allocate a block of memory on the heap or the stack, but also to allocate structures (both global and local) and arrays of structures!

So any changes in that region are potentially very risky.


As far as I can see the 'problem' in my code is rather more straightforward. If a DIM fails with a 'DIM space' error it leaves the array descriptor (stub) on the heap but doesn't advance the heap pointer ('END') past it, thereby leaving the heap in an inconsistent state.
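To make that inconsistency concrete, here is a small C model. It is a sketch only, not the actual BBC BASIC or Brandy code: `heap_model`, `DESC_SIZE`, `dim_buggy` and `dim_checked` are invented for illustration, and checking for room before linking the descriptor is just one possible ordering, not necessarily the fix that was applied.

```c
#include <assert.h>
#include <stddef.h>

#define HEAP_SIZE 1024   /* hypothetical heap size in bytes */
#define DESC_SIZE 16     /* hypothetical array descriptor size */

typedef struct {
    size_t end;          /* first free byte ('END' in the thread) */
    size_t limit;        /* where the heap runs out of room */
    size_t linked_top;   /* one past the highest descriptor that is linked
                            into the variable list */
} heap_model;

void heap_init(heap_model *h) {
    assert(h != NULL);
    h->end = 0;
    h->limit = HEAP_SIZE;
    h->linked_top = 0;
}

/* The heap is consistent if every linked descriptor lies below END. */
int heap_consistent(const heap_model *h) {
    return h->linked_top <= h->end;
}

/* Models the failure: the descriptor is linked before the size check,
   and END is not advanced when the check fails. Returns 0 on 'DIM space'. */
int dim_buggy(heap_model *h, size_t data_bytes) {
    size_t room = h->limit - h->end;
    if (room < DESC_SIZE) return 0;
    h->linked_top = h->end + DESC_SIZE;            /* stub is now linked */
    if (data_bytes > room - DESC_SIZE) return 0;   /* END left behind */
    h->end += DESC_SIZE + data_bytes;
    return 1;
}

/* One possible ordering: check that everything fits, then commit. */
int dim_checked(heap_model *h, size_t data_bytes) {
    size_t room = h->limit - h->end;
    if (room < DESC_SIZE || data_bytes > room - DESC_SIZE) return 0;
    h->linked_top = h->end + DESC_SIZE;
    h->end += DESC_SIZE + data_bytes;
    return 1;
}
```

After a failed `dim_buggy` call the stub sits in space that END still reports as free, so a later allocation can overlap it. The other obvious remedy, advancing END past the stub on failure, would also restore consistency in this model.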

-

You should find this issue resolved on the latest nightly build.